Hello, and welcome back to our series on Voice Activation in real-time web applications. In the previous articles, we looked at an overview of voice technology, created a web app with text-to-speech using AngularJS, and then added speech recognition support. In this article, we will add Siri-like voice commands so that our app can handle spoken requests such as setting a wake-up alarm.

Dependencies

First up, we have the JavaScript and CSS dependencies of our application.

<!doctype html> <html> <head> <script src="https://cdn.pubnub.com/pubnub-3.15.1.min.js"></script> <script src="https://ajax.googleapis.com/ajax/libs/angularjs/1.5.6/angular.min.js"></script> <script src="https://cdn.pubnub.com/sdk/pubnub-angular/pubnub-angular-3.2.1.min.js"></script> <script src="https://cdnjs.cloudflare.com/ajax/libs/underscore.js/1.8.3/underscore-min.js"></script> <script src="http://cdglabs.github.io/ohm/dist/ohm.js"></script> <link rel="stylesheet" href="//netdna.bootstrapcdn.com/bootstrap/3.0.2/css/bootstrap.min.css" /> <link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/font-awesome/4.6.3/css/font-awesome.min.css" /> </head> <body>

For folks who have done front-end implementation with AngularJS before, these should be the usual suspects. This time, however, we've added one extra library called Ohm, a parser framework that we use to create our domain-specific command language.

The User Interface

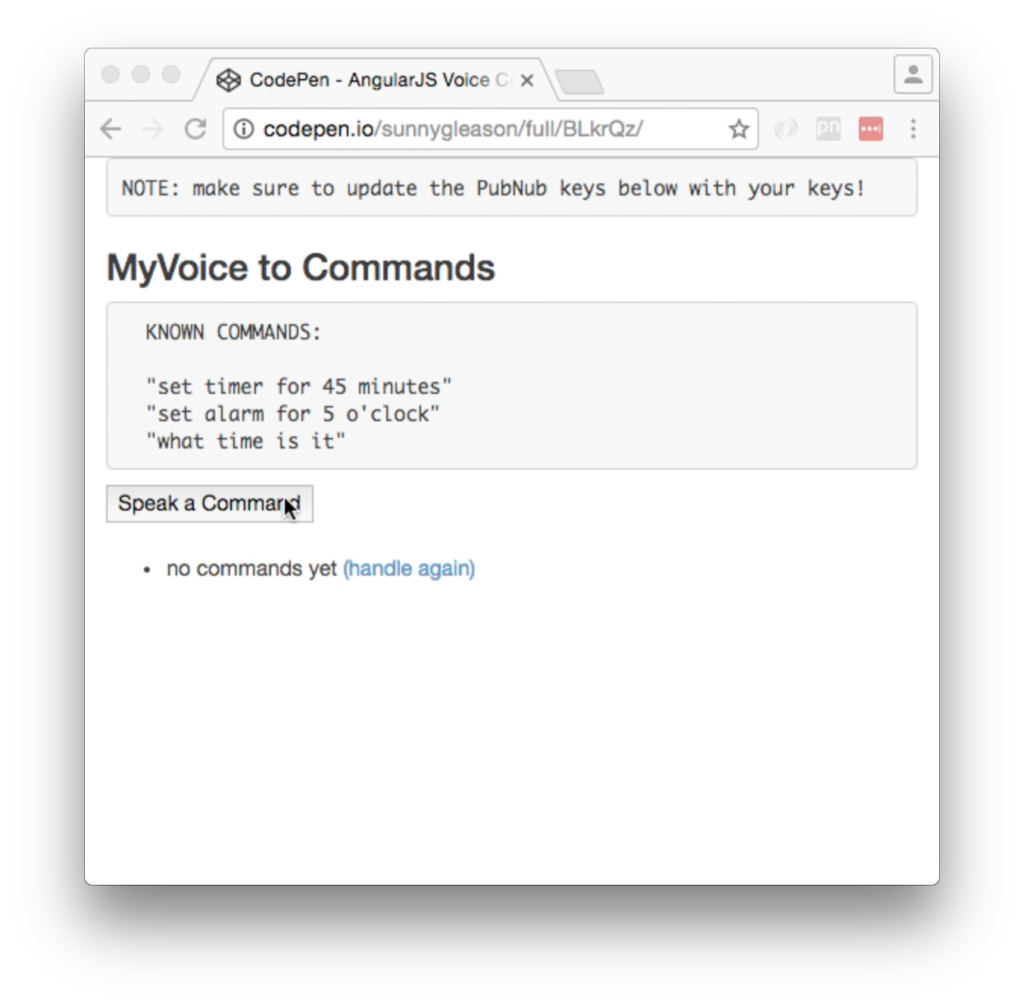

Here's what we intend the UI to look like:

The UI is pretty straightforward – everything is inside a div tag that is managed by a single controller that we'll set up in the AngularJS code. That h3 heading should be pretty self-explanatory.

<div class="container" ng-app="PubNubAngularApp" ng-controller="MySpeechCtrl"> <pre>NOTE: make sure to update the PubNub keys below with your keys!</pre> <h3>MyVoice to Commands</h3>

We provide some documentation of commands, plus a single button to dictate the command to send to the PubNub channel. Much simpler than keyboard input, for sure!

<pre> KNOWN COMMANDS: "set timer for 45 minutes" "set alarm for 5 o'clock" "what time is it" </pre> <input type="button" ng-click="dictateIt()" value="Speak a Command" />

Our UI consists of a simple list of commands. We iterate over the commands in the controller scope using a trusty ng-repeat. Each command includes a link to allow the user to re-handle the command (using our text-to-speech feature from the previous article).

<ul>

<li ng-repeat="command in commands track by $index">

{{command.text}}

<a ng-click="handleIt(command)">(handle again)</a>

</li>

</ul>

</div>

And that's it – a functioning real-time UI in just a handful of lines of code (thanks, AngularJS)!

The Ohm Code

Ohm is an awesome framework for quickly creating domain-specific languages. We've seen it before in several PubNub blog articles about creating new languages. Check out the framework documentation to learn more.

There are two main parts to creating an Ohm language implementation: the grammar (expressed using Ohm syntax), and the semantics (expressed as a JavaScript map of expression names to function handlers). Here we create a small set of commands for working with time-related features.

<script type="text/ohm-js">

// An Ohm grammar for parsing voice commands

COMMAND {

command = timer_cmd | alarm_cmd | time_isit_cmd

timer_cmd = "set timer for " number " minutes"

alarm_cmd = "set alarm for " number " o'clock"

time_isit_cmd = "what time is it"

number = digit+

}

</script>

In the Ohm grammar above:

- command : is either a timer command, an alarm command, or a “what time is it” request.

- timer_cmd : is the words “set timer for ”, followed by a number, followed by “ minutes”.

- alarm_cmd : is the words “set alarm for ”, followed by a number, followed by “ o'clock”.

- time_isit_cmd : is the phrase “what time is it”.

- number : is a sequence of one or more digits.

This should make sense in our basic application. In a real-world implementation, we would likely have more specific data types, time formats and validation.

The next code creates the grammar object from the SCRIPT tag above, and configures the semantics of the language. Each phrase of the grammar has a handler function that returns a machine-processable representation of the data.

<script>

var gram = ohm.grammarFromScriptElement();

var sem = gram.semantics().addOperation('toList',{

number: function(a) { return parseInt(this.interval.contents,10); },

timer_cmd: function(_, number, _) { return ['timer_cmd', number.toList(), " minutes"]; },

alarm_cmd: function(_, hour, _) { return ['alarm_cmd', hour.toList(), " o'clock"]; },

time_isit_cmd: function(x) { return ['time_isit_cmd']; },

command: function(x) { return {text:this.interval.contents, cmd:x.toList()}; }

});

In the Ohm semantics above:

- command : returns a map containing the original text and the parsed command.

- timer_cmd : returns an array of the string “timer_cmd”, the parsed number of minutes, and the string “ minutes”.

- alarm_cmd : returns an array of the string “alarm_cmd”, the parsed hour, and the string “ o'clock”.

- time_isit_cmd : returns an array containing the single string “time_isit_cmd”.

- number : parses and returns a base-10 integer.

These handlers are very basic. In cases where the grammar is more complex (such as allowing additional time units and more detailed alarm times), there would potentially be conditional logic in the handlers to handle the different cases.

Overall, that's a pretty small amount of code to configure our own language. Not too bad!
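To make the shape of the parsed output concrete, here is a minimal sketch that mimics the grammar and the toList semantics with plain regular expressions instead of Ohm. The names RULES and parseCommand are hypothetical stand-ins for illustration only; the app itself relies on gram.match() and sem(match).toList().

```javascript
// Hypothetical stand-in for the Ohm grammar + semantics, for illustration only.
// Each entry mirrors one rule of the COMMAND grammar and its toList handler.
var RULES = [
  { name: 'timer_cmd', pattern: /^set timer for (\d+) minutes$/, suffix: ' minutes' },
  { name: 'alarm_cmd', pattern: /^set alarm for (\d+) o'clock$/, suffix: " o'clock" },
  { name: 'time_isit_cmd', pattern: /^what time is it$/ }
];

function parseCommand(text) {
  for (var i = 0; i < RULES.length; i++) {
    var m = RULES[i].pattern.exec(text);
    if (!m) { continue; }
    var cmd = [RULES[i].name];
    // Rules with a number capture also carry the parsed integer and the unit text.
    if (m[1] !== undefined) {
      cmd.push(parseInt(m[1], 10), RULES[i].suffix);
    }
    return { text: text, cmd: cmd };
  }
  // No rule matched: return only the text, like the error object in the app.
  return { text: text };
}

console.log(JSON.stringify(parseCommand('set timer for 45 minutes')));
console.log(JSON.stringify(parseCommand('open the door')));
```

A recognized command carries a cmd array whose first element names the rule, while an unrecognized one carries only its text. That difference is what the controller later uses to decide how to respond.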

The AngularJS Code

Okay! Now that we have our domain-specific command language out of the way, we're ready to dive into the AngularJS code. It's not a ton of JavaScript, so this should hopefully be pretty straightforward.

The first lines we encounter set up our application (with a necessary dependency on the PubNub AngularJS service) and a single controller (which we dub MySpeechCtrl). Both of these values correspond to the ng-app and ng-controller attributes from the preceding UI code.

angular.module('PubNubAngularApp', ["pubnub.angular.service"])

.controller('MySpeechCtrl', function($rootScope, $scope, Pubnub) {

Next up, we initialize a couple of values. First is an array of command objects, which starts out with a single placeholder entry. After that, we set up msgChannel as the name of the channel where we will send and receive real-time structured data messages.

$scope.commands = [{text:"no commands yet"}];

$scope.msgChannel = 'MySpeech';

We initialize the Pubnub object with our PubNub publish and subscribe keys mentioned above, and set a flag on $rootScope to make sure the initialization only occurs once. NOTE: this uses the v3 API syntax.

if (!$rootScope.initialized) {

Pubnub.init({

publish_key: 'YOUR_PUB_KEY',

subscribe_key: 'YOUR_SUB_KEY',

ssl:true

});

$rootScope.initialized = true;

}

The next thing we'll need is a real-time message callback called msgCallback; it takes care of all the real-time messages we need to handle from PubNub. In our case, we have only one scenario – an incoming message containing the command to handle. We push the command object onto the scope array and pass it to the handleIt() function for text-to-speech translation (we'll cover that later). The push() operation should be in a $scope.$apply() call so that AngularJS gets the idea that a change came in asynchronously.

var msgCallback = function(payload) {

$scope.$apply(function() {

$scope.commands.push(payload);

});

$scope.handleIt(payload);

};

In the main body of the controller, we subscribe() to the message channel (using the JavaScript v3 API syntax) and bind the events to the callback function we just created.

Pubnub.subscribe({ channel: $scope.msgChannel, message: msgCallback });

Next, we define the handleIt() function, which takes a command object and passes the text string to the Text-To-Speech engine. It should be familiar from the previous article.

$scope.handleIt = function (command) {

if (command.cmd && command.cmd[0] === "time_isit_cmd") {

window.speechSynthesis.speak(new SpeechSynthesisUtterance("the current time is " + (new Date()).toISOString().split("T")[1].split(".")[0]));

} else if (command.cmd) {

window.speechSynthesis.speak(new SpeechSynthesisUtterance("okay, got it - I will " + command.text));

} else {

window.speechSynthesis.speak(new SpeechSynthesisUtterance("I'm not quite sure about that"));

}

};

To make this basic app a bit more sophisticated, we break out three different cases. To handle a time request, we extract and speak the time portion of the current Date object's ISO representation (note that toISOString() always reports UTC, not the user's local time). Otherwise, if the command parsed correctly, we acknowledge it (a real implementation would also perform the work of the command itself). Finally, if the command wasn't recognized by the grammar, we speak an error message.
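The three cases can also be pulled out into a small pure function, which makes them easy to try outside the browser. The sketch below is one possible arrangement, not the code from the app: responseFor and pad2 are hypothetical names, and it reads local hours and minutes instead of the ISO string, since toISOString() reports UTC.

```javascript
// Sketch: choose the phrase to speak for a command object.
// `now` is injectable so the function can be exercised with a fixed Date.
function pad2(n) {
  return (n < 10 ? '0' : '') + n;
}

function responseFor(command, now) {
  now = now || new Date();
  if (command.cmd && command.cmd[0] === 'time_isit_cmd') {
    // Local wall-clock time, unlike toISOString(), which always reports UTC.
    var hhmm = pad2(now.getHours()) + ':' + pad2(now.getMinutes());
    return 'the current time is ' + hhmm;
  } else if (command.cmd) {
    // Parsed successfully: acknowledge by echoing the original text.
    return 'okay, got it - I will ' + command.text;
  }
  // No cmd property means the grammar did not recognize the text.
  return "I'm not quite sure about that";
}

console.log(responseFor({ text: 'set timer for 45 minutes', cmd: ['timer_cmd', 45, ' minutes'] }));
```

In the controller, the returned string could be passed straight to new SpeechSynthesisUtterance(...), keeping the speech call in one place.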

Most importantly for the purposes of this article, we define a dictateIt() function that performs the speech recognition task. We instantiate a new webkitSpeechRecognition instance to perform the speech recognition, and populate its onresult handler with our own custom logic. The first part of the handler concatenates all the recognized text fragments that are marked as final. The second part parses the lowercased text using the grammar, and turns it into a semantic representation (in the success case) or an error object containing only the text (in the case the command didn't match). The third part calls Pubnub.publish() to send that result out on the channel. Finally, we call recognition.start() to initiate speech recognition.

$scope.dictateIt = function () {

var theText = "";

var recognition = new webkitSpeechRecognition();

recognition.onresult = function (event) {

for (var i = event.resultIndex; i < event.results.length; i++) {

if (event.results[i].isFinal) {

theText += event.results[i][0].transcript;

}

}

var match = gram.match(theText.toLowerCase());

var result = match.succeeded() ? sem(match).toList() : {text:theText};

Pubnub.publish({

channel: $scope.msgChannel,

message: result

});

};

recognition.start();

};
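The transcript-gathering step of the handler can be sketched on its own and tried with a hand-built object, without a microphone. In the sketch below, collectFinalTranscript and fakeEvent are hypothetical names, and the trim() call is an addition that the code above does not have; it assumes the recognizer may return text with surrounding whitespace, which would otherwise prevent an exact grammar match.

```javascript
// Sketch: gather the final transcripts from a SpeechRecognitionEvent-like object.
function collectFinalTranscript(event) {
  var text = '';
  for (var i = event.resultIndex; i < event.results.length; i++) {
    var result = event.results[i];
    // Interim (non-final) results may still change, so skip them.
    if (result.isFinal) {
      text += result[0].transcript;
    }
  }
  // Trim and lowercase so the text lines up with the lowercase grammar literals.
  return text.trim().toLowerCase();
}

// A hand-built object imitating the event shape, for use outside a browser.
var fakeEvent = {
  resultIndex: 0,
  results: [
    Object.assign([{ transcript: ' Set timer for 45 minutes' }], { isFinal: true }),
    Object.assign([{ transcript: 'ignored interim text' }], { isFinal: false })
  ]
};

console.log(collectFinalTranscript(fakeEvent));
```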

We mustn't forget to close out the script and HTML tags accordingly.

}); </script> </body> </html>

Not too shabby for just a bit over a hundred lines of HTML & JavaScript! For your convenience, this code is also available as a Gist on GitHub, and as a CodePen as well. Enjoy!

Additional Features

Overall, we were very pleasantly surprised at how easy it was to create a tiny language for voice commands with Ohm and connect it to speech recognition features. There are a few areas where it might be nice to extend the capabilities:

- New commands: we can create new commands by adding phrases to the grammar specification and semantics, and adding them to the “or” clause of the top-level command expression in the grammar.

- New data types: we can create new phrases in the grammar for recognizing locations (such as “city state” pairs) and more complicated time expressions.

- More flexible grammar: we can mark words and phrases as optional to make it easier for users to speak commands naturally.

With just a few modifications, it's possible to approach the power of Apple's Siri, Google Now and Microsoft Cortana from your own application!
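As a rough illustration of the "more flexible grammar" idea from the list above, the sketch below accepts optional filler words and several time units. It uses a regular expression as a stand-in for an extended Ohm rule; FLEXIBLE_TIMER, SECONDS_PER_UNIT, and parseFlexibleTimer are hypothetical names, and the returned object shape is an assumption rather than the format used by the app.

```javascript
// Hypothetical sketch of a more forgiving timer phrase.
// Optional parts: "please", "a"/"the", and singular or plural units.
var FLEXIBLE_TIMER = /^(?:please )?set (?:a |the )?timer for (\d+) (second|minute|hour)s?$/;

var SECONDS_PER_UNIT = { second: 1, minute: 60, hour: 3600 };

function parseFlexibleTimer(text) {
  var m = FLEXIBLE_TIMER.exec(text.trim().toLowerCase());
  if (!m) {
    return null; // phrase not recognized
  }
  var amount = parseInt(m[1], 10);
  return {
    cmd: 'timer_cmd',
    amount: amount,
    unit: m[2],
    // Normalize to seconds so downstream code handles a single unit.
    seconds: amount * SECONDS_PER_UNIT[m[2]]
  };
}

console.log(JSON.stringify(parseFlexibleTimer('Please set a timer for 2 hours')));
```

Normalizing every duration to seconds is one way to keep the handler logic free of per-unit branches as more units are added.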

Conclusion

Thank you so much for joining us in the Voice Commands article of our Voice Activation series! Hopefully it's been a useful experience learning about voice-enabled technologies. In future articles, we hope to dive deeper into even more Speech APIs, platforms, and use cases for voice commands in real-time web applications.

Stay tuned, and please reach out anytime if you feel especially inspired or need any help!