Auto Scaling vs Load Balancing: Key Differences

In modern cloud-native architecture, auto-scaling and load balancing are foundational for high availability and performance. While often mentioned together, they solve distinct problems. Misunderstanding their roles can lead to underutilized infrastructure, degraded performance, or spiraling costs.

Auto scaling controls when and how many servers to run; load balancing controls where traffic goes.

Used together, they improve resilience and cost efficiency, keep latency low, and let a system absorb traffic spikes smoothly.

What They Do (Technically)

Auto Scaling

Automatically adjusts the number of compute instances based on traffic or performance metrics.

Used for: Capacity planning, cost optimization, and fault tolerance.

- Horizontal scaling: Add/remove instances (e.g., EC2, GKE nodes).

- Vertical scaling: Resize instance type (less common in auto-scaling setups).

Load Balancing

Distributes incoming traffic across multiple instances or services.

Used for: Availability, latency reduction, fault tolerance.

- Layer 4 (TCP/UDP): Works like a postal worker who routes packages by ZIP code: fast and efficient, but unaware of what is inside. It directs traffic using IP address and port, without inspecting the request itself.

- Layer 7 (HTTP/HTTPS): Content-aware (e.g., ALB, NGINX Ingress). Works like a concierge who reads the label on each letter or package to decide exactly where it should go, using application data such as URLs, headers, or cookies to make routing decisions.
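
The difference between the two layers can be sketched in a few lines of Python. This is a minimal illustration, not how ALB or NGINX are implemented: the backend addresses, pool names, and route table are hypothetical placeholders, and hashing the client address is just one of several possible Layer 4 strategies.

```python
import hashlib
from urllib.parse import urlsplit

# Hypothetical backends and pools, for illustration only.
L4_BACKENDS = ["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"]
L7_ROUTES = {"/api/": "api-pool", "/static/": "static-pool"}
DEFAULT_POOL = "web-pool"


def pick_l4_backend(client_ip: str, client_port: int) -> str:
    """Layer 4: choose a backend from connection metadata only."""
    key = f"{client_ip}:{client_port}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(L4_BACKENDS)
    return L4_BACKENDS[index]


def pick_l7_pool(url: str) -> str:
    """Layer 7: choose a pool by inspecting the request path."""
    path = urlsplit(url).path
    for prefix, pool in L7_ROUTES.items():
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


if __name__ == "__main__":
    print(pick_l4_backend("203.0.113.7", 51000))
    print(pick_l7_pool("https://example.com/api/users"))
```

The Layer 4 function never sees the URL, so it cannot send `/api/` and `/static/` requests to different pools; the Layer 7 function can, at the cost of parsing each request.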

Production Scenario

Imagine a REST API backend running in Kubernetes on AWS EKS:

- Load Balancer: An AWS ALB fronts a Kubernetes Ingress, distributing traffic to backend pods.

- Auto Scaling: Kubernetes HPA (Horizontal Pod Autoscaler) increases pod count based on CPU or custom metrics like request latency.

Request Flow:

Client → ALB → Ingress Controller → Backend Pod

If traffic spikes from 500 rps to 10,000 rps:

- Load balancer spreads connections across healthy backends so that no single pod absorbs the spike.

- HPA detects rising CPU (>70%) or latency (>200ms) and scales pods from 5 → 50 in under a minute, subject to the configured replica limits and cooldowns.

- Cluster Autoscaler may also add EC2 nodes if the new pods cannot be scheduled on the existing ones.

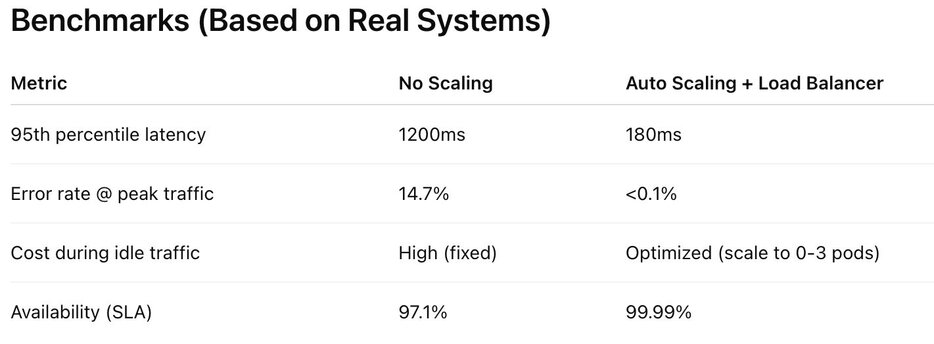

Source: Internal load test on a microservices app on EKS + ALB, with HPA + Cluster Autoscaler.
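
To make the HPA step in the scenario concrete, the sketch below applies the replica formula described in the Kubernetes documentation: desired = ceil(current × currentMetric / targetMetric), with no change when the ratio is within a tolerance (0.1 by default). The function name and the clamp defaults are assumptions chosen to match the scenario above; the real controller also applies stabilization windows and scaling policies that are not modeled here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 50,  # assumed cap, matching the 50-pod scenario
    tolerance: float = 0.1,
) -> int:
    """Simplified version of the HPA replica calculation."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 5 pods averaging 140% CPU against a 70% target.
    print(desired_replicas(5, 140, 70))   # 10
    # A much larger overshoot is capped at max_replicas.
    print(desired_replicas(5, 1400, 70))  # 50
```

Because each evaluation multiplies the current replica count by the metric ratio, a sustained spike can take several evaluation cycles to reach the final pod count, which is one reason scale-up time depends on limits and cooldowns.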

Best Practices

- Use load balancers even with a single instance to simplify failover and future scaling.

- Configure graceful termination and connection draining to prevent 5xx errors during scale-in.

- Monitor with Prometheus + Grafana for latency, queue depth, and throttling.

- Tune HPA/ASG cooldowns and thresholds—default settings often underperform under burst traffic.

- Use readiness probes to avoid routing traffic to pods that have started but are not yet ready to serve requests.
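
Two of the practices above, readiness gating and connection draining, can be illustrated with a toy round-robin balancer. This is a sketch under simplifying assumptions (single-threaded, in-memory state, hypothetical class and field names), not a model of how ALB or kube-proxy work internally.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    ready: bool = False     # set to True once a readiness check passes
    draining: bool = False  # set to True when scale-in begins
    in_flight: int = 0      # requests currently being served


class RoundRobinBalancer:
    def __init__(self, backends):
        self.backends = list(backends)
        self._next = 0

    def pick(self) -> Backend:
        """Return the next backend that is ready and not draining."""
        n = len(self.backends)
        for offset in range(n):
            candidate = self.backends[(self._next + offset) % n]
            if candidate.ready and not candidate.draining:
                self._next = (self._next + offset + 1) % n
                candidate.in_flight += 1
                return candidate
        raise RuntimeError("no ready backends")

    def finish(self, backend: Backend) -> None:
        """Record that one request on this backend has completed."""
        backend.in_flight -= 1

    def can_terminate(self, backend: Backend) -> bool:
        """A draining backend is safe to stop once it has no open requests."""
        return backend.draining and backend.in_flight == 0


if __name__ == "__main__":
    a = Backend("a", ready=True)
    b = Backend("b")  # still starting, never receives traffic
    c = Backend("c", ready=True)
    lb = RoundRobinBalancer([a, b, c])
    print([lb.pick().name for _ in range(3)])  # ['a', 'c', 'a']
```

Marking a backend as draining stops new requests from reaching it while existing ones finish, which is the behavior that prevents 5xx errors during scale-in.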

Load Balancing with PubNub

PubNub reduces the load-balancing burden on your app by offloading real-time communication (e.g., presence, messaging, telemetry) to its globally distributed edge network. Your servers no longer need to manage long-lived connections such as WebSockets or long polling.

- App Optimization: PubNub SDKs handle connection management, failover, and multiplexing across channels, which reduces CPU usage, improves battery life on web and mobile clients, and keeps UIs responsive under high load.

- Infrastructure Optimization: By shifting real-time traffic off your origin infrastructure, you avoid over-provisioning API gateways, integrations, and backend services. This results in more predictable server-side load and simpler horizontal scaling strategies.

Example: In a real-time collaboration tool, document edit events are streamed via PubNub, keeping backend servers focused on authentication and storage rather than maintaining thousands of active WebSocket connections.

Auto Scaling with PubNub

PubNub decouples event distribution from compute, enabling more efficient, event-driven autoscaling. Since clients receive data directly from PubNub, backend services scale only in response to business logic triggers (e.g., data writes, alerts), not to raw connection volume.

- App Optimization: Reduces latency and jitter for real-time events, allowing mobile and web clients to receive updates in near real time without polling or manual refresh.

- Infrastructure Optimization: Backend services can scale reactively using serverless functions or containerized microservices, based on message events rather than client count.

Example: In a medical IoT monitoring platform, devices publish telemetry via PubNub. Cloud functions process only specific messages (e.g., threshold breaches), allowing autoscaling to respond to actual compute needs rather than continuous data ingestion.
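
The idea of scaling on actionable messages rather than raw ingestion can be sketched as follows. This example deliberately does not use the PubNub SDK: the message shape, the `heart_rate` field, the threshold, and the per-worker capacity are all hypothetical values chosen for illustration.

```python
import math

# Hypothetical threshold and capacity figures, for illustration only.
HEART_RATE_LIMIT = 120
MESSAGES_PER_WORKER = 100
MAX_WORKERS = 20


def needs_processing(message: dict) -> bool:
    """Only threshold breaches require backend compute."""
    return message.get("heart_rate", 0) > HEART_RATE_LIMIT


def workers_needed(messages: list[dict]) -> int:
    """Size the worker pool from actionable messages, not total volume."""
    actionable = sum(1 for m in messages if needs_processing(m))
    if actionable == 0:
        return 0
    return min(MAX_WORKERS, math.ceil(actionable / MESSAGES_PER_WORKER))


if __name__ == "__main__":
    normal = [{"device": f"d{i}", "heart_rate": 72} for i in range(750)]
    alerts = [{"device": f"a{i}", "heart_rate": 150} for i in range(250)]
    # 1,000 messages arrive, but only 250 breach the threshold.
    print(workers_needed(normal + alerts))  # 3
```

Sizing on total volume would give 10 workers for the same batch under these assumed figures, so filtering before scaling is where the savings come from.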