Abusive Content Moderation with NLP

This is a guest post from Vadim Berman, CEO of Tisane Labs.

In any environment that connects multiple users in a single place, combatting abusive content should be top of mind. Cyberbullying, hate speech, sexual harassment, and criminal dealings are all unfortunately common in these settings. That's why online communities need some sort of moderation.

But with the sheer volume of content being sent on a daily basis, moderators are overwhelmed. That's where natural language processing (NLP) comes into play. Specifically, in this post, we'll walk through all the different forms of content moderation using NLP, and uncover the underlying code that makes it all work.

WARNING. Below, we quote examples of abusive content, many sourced from the real world, that might be disturbing to some readers. Unfortunately, this is the Internet, and this is precisely what we’re trying to fix.

What Tisane Does and Doesn’t Do

In a few words, the Tisane API tags content that is likely to violate rules of conduct.

Even though there is a broad consensus over what constitutes abuse, some communities may disagree over details. We do not dictate the rules. We merely provide the tools to alert the moderators.

Think of us as a vendor of smoke alarms. If you decide to allow smoking in a particular area in your venue, simply do not install the smoke alarm there, or adjust its settings for your needs. Clearly, some venues allow smoking everywhere. And so, some online communities apply no moderation at all.

- Tisane API is not equipped to remove or silence the violators.

- We also do not aim to substitute human moderators. Our mission is to make their task easier.

- We also do not determine what’s right and what’s wrong.

- As far as our own beliefs go, arguing is fine. Punching each other in the face is not fine. Nothing even remotely controversial here.

- When it’s borderline, we don’t tag. The point is to establish presence, not to oppress.

Taxonomy of Abuse

Unlike, say, Jigsaw’s Perspective, Tisane differentiates between different types of abusive content. Every instance of abuse is assigned a type. In this post, we will discuss the different types of abuse we detect.

Personal Attacks and Cyberbullying

The most urgent need, in terms of moderation, is addressing personal attacks. The simple rule is, it’s your right to be negative about my ideas or what I post. It is not your right to aim your negativity at me as a person.

Of course, many people will find a reason to complain, but this is a nearly universally accepted rule online. And yes, sometimes trolls may target a topic close to the person without targeting them directly. But we must start somewhere. We stick to the definition that a personal attack is an attack on a participant in a conversation or a thread or people close to them (relatives and/or friends).

Instances of this type of abuse are flagged with the personal_attack type.
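To illustrate how a client might consume such tags, here is a minimal sketch in Python. The response shape is an assumption made for illustration: an `abuse` list whose entries carry `type`, `offset`, `length`, and `text` fields. The sample data is hand-written, not actual API output.

```python
import json
from collections import defaultdict

# Hand-written sample in an ASSUMED response shape (not actual API output).
SAMPLE_RESPONSE = json.loads("""
{
  "text": "Your music is bad. You are a bad person.",
  "abuse": [
    {"type": "personal_attack", "offset": 19, "length": 21,
     "text": "You are a bad person."}
  ]
}
""")


def snippets_by_type(response):
    """Group the flagged snippets by their abuse type."""
    grouped = defaultdict(list)
    for item in response.get("abuse", []):
        grouped[item["type"]].append(item["text"])
    return dict(grouped)


def flagged_span(response, item):
    """Recover the flagged snippet from the original text via offset and length."""
    start = item["offset"]
    return response["text"][start:start + item["length"]]


print(snippets_by_type(SAMPLE_RESPONSE))
```

Grouping by type lets a community act on the categories it cares about and ignore the rest, in line with the smoke alarm analogy above.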

Let’s make the distinction clear.

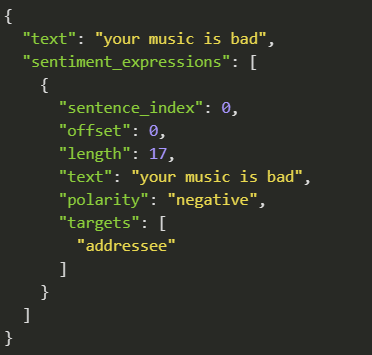

“Your music is bad” is a legitimate, even if negative, opinion:

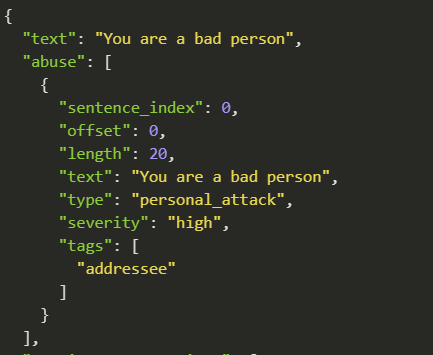

So is “you should feel bad.” However, “you are a bad person” is not legitimate, and so Tisane flags it as a personal attack:

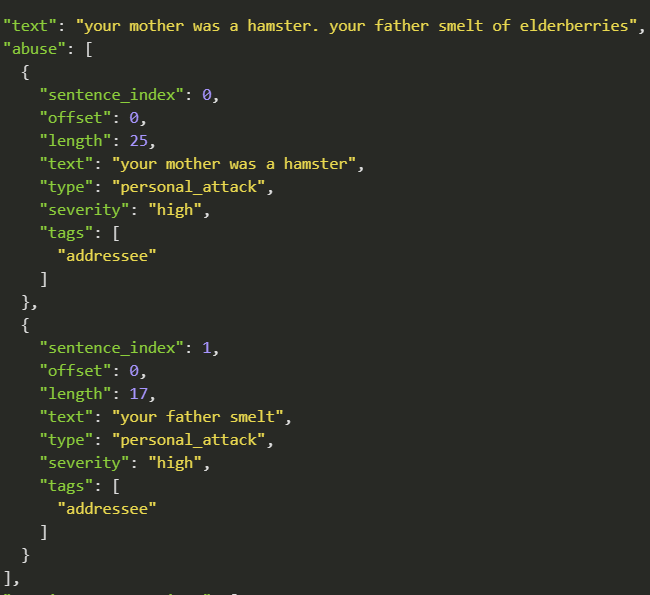

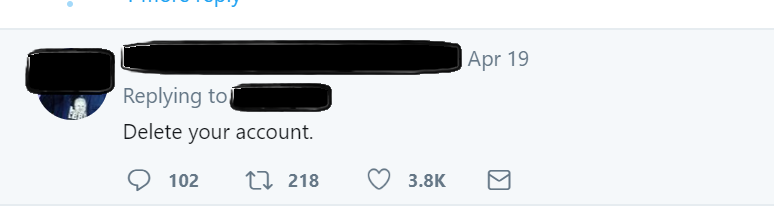

It’s not much of a challenge though, is it? Let’s try another cinematic classic:

While attacking or criticizing someone’s creations and ideas is bound to generate arguments, it is squarely within the bounds of permitted discourse. However, badmouthing someone’s loved ones certainly qualifies as a personal attack.

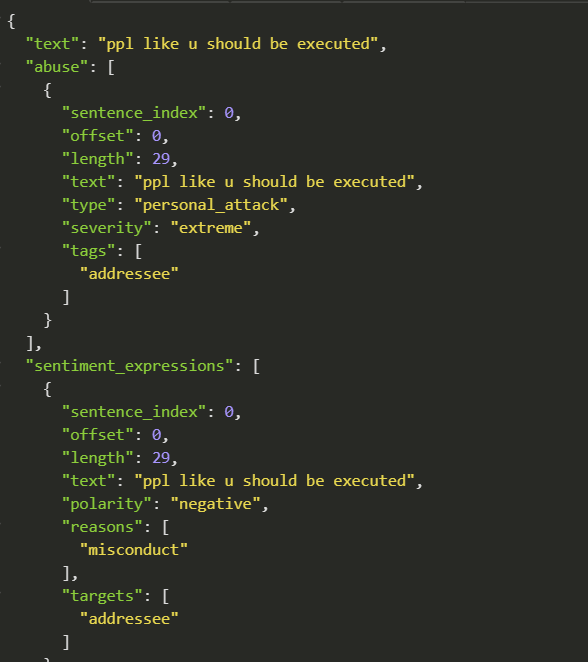

Now, a bit of real-world abuse.

Crude stuff is easier to catch, of course:

or…

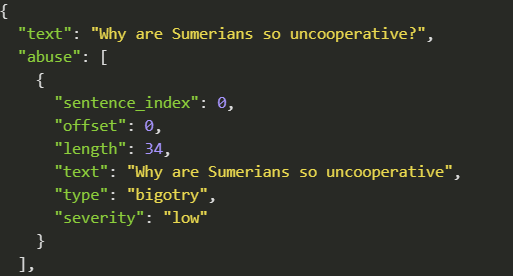

…but sometimes, the abusive message may not contain abusive language, such as in the example below.

Since Tisane focuses on the underlying meaning, it captures the personal attack intent here as well:

Abusive Content Moderation for Hate Speech

Next up, bigotry. Ethnicity/religious group/sexual minority X is evil, criminals, thieves, murderers, bad drivers, evade taxes, secretly plot to take over the world/poison my dog, and so on. Then, of course, there are plain slurs, which can still be found even on mainstream social media, despite all the clean-up efforts. You get the picture.

Tisane assigns the label bigotry to this kind of content (a term more universally accepted than “hate speech”). While discussions between political divisions become increasingly heated, at this point, we only track abusive content aimed at groups that are protected in most countries, that is, groups defined by race, ethnicity, religion, national origin, sexual orientation, or gender.

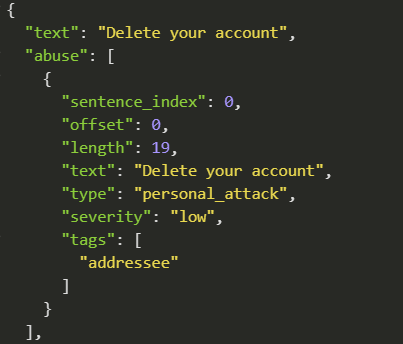

To reduce possible misunderstandings and provocations, and to spare ourselves the people who prefer to attack before reading the entire article, we are going to use ethnicities that no longer exist. Let’s start simple.

Let’s make it a bit less direct.

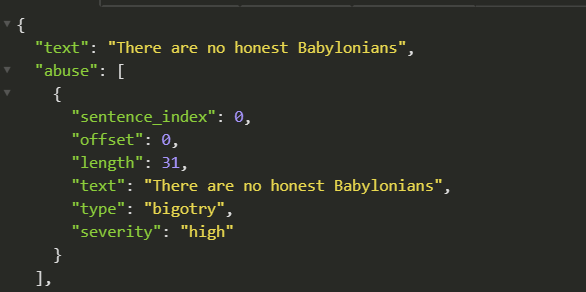

What about claims clumsily disguised as rhetorical questions?

Now let’s try some real-world bigotry.

(A great illustration of why online communities need moderators, isn’t it?)

Sexual Advances

Most social networks are not dating sites. Being a woman between the ages of 20 and 45 means above-average chances of being hit on in an online community. Often, excessive attention of an unwanted kind becomes tiresome.

Like we said before, Tisane only tags things. Obviously, Tinder will never ban sexual advances. But networks like Quora or LinkedIn would (and many, many users believe they should). Tisane tags any compliments on the recipient’s appearance, unsolicited offers to engage in a relationship and/or sexual activities, inquiries about the relationship status, and the like. The label is sexual_advances.

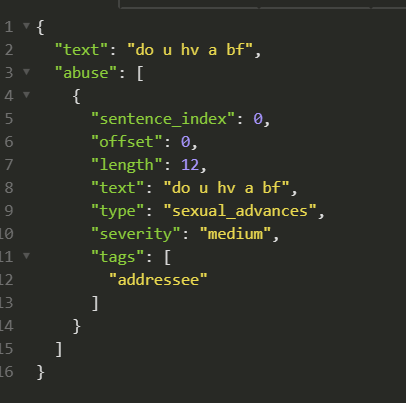

Sometimes, it is straightforward.

Sometimes, it may be an unwanted compliment.

In this particular case, note that the system may process the snippet differently, depending on the format of the content. When the format is set to dialogue, the utterance is assumed to be a crude compliment constituting sexual advances. Otherwise, it might be tagged for crude language, but no more.
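As a rough sketch of how the format hint could be passed along with a request, the Python snippet below builds two request bodies for the same message. Only the `dialogue` format value comes from the text above. The field names (`language`, `content`, `settings`, `format`) and the placeholder message are assumptions for illustration, and no request is actually sent.

```python
import json


def build_payload(content, language="en", content_format=None):
    """Build a request body; all field names here are assumed, not confirmed."""
    settings = {}
    if content_format is not None:
        # Hint that the text is a line in a conversation, not a standalone post.
        settings["format"] = content_format
    return {"language": language, "content": content, "settings": settings}


# Placeholder message; substitute the actual user utterance.
message = "<user message>"

chat_payload = build_payload(message, content_format="dialogue")
generic_payload = build_payload(message)

print(json.dumps(chat_payload, indent=2))
```

The only difference between the two bodies is the format hint, which is what would let the same utterance be read as an advance in a chat and as merely crude language elsewhere.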

Sometimes, it may be a very suggestive question.

Criminal Activity

While criminal activity (spam aside) rarely surfaces in mainstream chat, some social media may be used to look for and close outright illegal deals. In addition, the Darknet is home to drug trade, malware trade, child exploitation, and carding activities, which are actively monitored by law enforcement agencies.

Lawtech companies, law enforcement agencies, and the emerging crypto forensics (also known as cryptocurrency intelligence or blockchain forensics) industry are often forced to comb through tons of irrelevant records manually to find what they need.

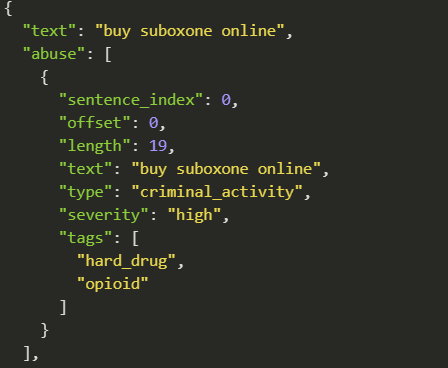

This is where Tisane helps to narrow down the search, and classify the criminal activity.

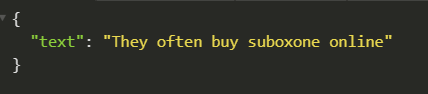

Like everything Tisane does, our tagging is not just about keywords. It is context-sensitive. Merely saying that someone else does it is not the same as advertising it.

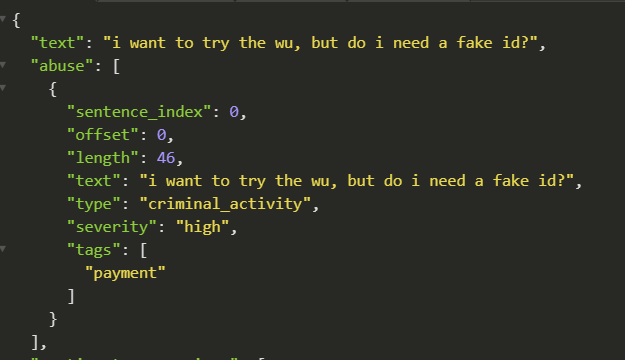

Not everything is about drug trade, of course. Identity theft constitutes a large portion of the Darknet economy.

(“WU” means “Western Union”. The mention of the fake ID clearly shows it’s fishy).

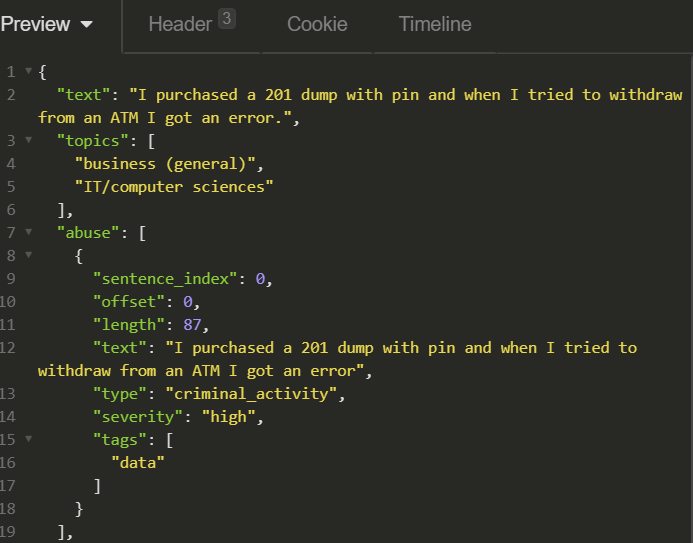

Carding (trade in stolen credit card data) is mainstream on the Darknet, too. Interestingly enough, just like the open, “normal” world of commerce, the criminal economy has its own parallel structures like customer service and technical support forums:

(A 201 dump is a type of credit card info database.)

External Contact

Companies running freelance marketplaces, or booking portals, where all transactions must be performed via the portal, often have to deal with a situation where the vendors attempt to avoid paying the commission and cut the deal directly with the buyers. They either use private messaging to provide their own payment terms, different from those they listed, or lure the user out to communicate using email or instant messaging.

To prevent this, some companies use regular expressions to detect and mask email addresses and phone numbers. Trouble is, the users get craftier. Additionally, the phone number masking may also remove a legitimate piece of numeric data, like the reservation number. And sometimes, luring users out is done for more sinister motives, be it scamming or sexual exploitation. One way or another, oftentimes, the only solution is to keep track of attempts to establish external contact.
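The limits of the regular expression approach are easy to reproduce. The Python sketch below uses two deliberately simple patterns of our own (illustrative assumptions, not anyone's production rules) to show both failure modes: a legitimate reservation number gets masked, while a spelled-out phone number or email address slips through.

```python
import re

# Deliberately simple, illustrative patterns.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{6,}\d")


def mask_contacts(message):
    """Mask anything that looks like an email address or a phone number."""
    message = EMAIL_RE.sub("[email hidden]", message)
    return PHONE_RE.sub("[number hidden]", message)


# Works as intended on the plain case.
print(mask_contacts("Write to me at bob@example.com"))

# False positive: a legitimate reservation number is masked too.
print(mask_contacts("Your reservation number is 48151623"))

# False negatives: crafty users simply spell things out.
print(mask_contacts("Call me at five five five, one two three four"))
print(mask_contacts("Write to bob at example dot com"))
```

Tightening the patterns trades one kind of error for the other, which is why tracking the intent to move the conversation elsewhere is more robust than masking strings.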

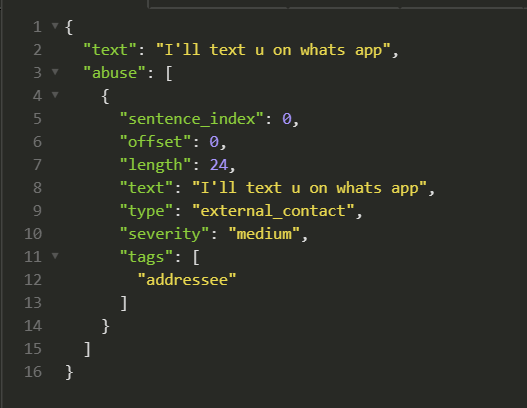

Tisane tags attempts to obtain contact details and solicitations to engage in external contact. The abuse type value is external_contact. Here is a solicitation to engage in external contact:

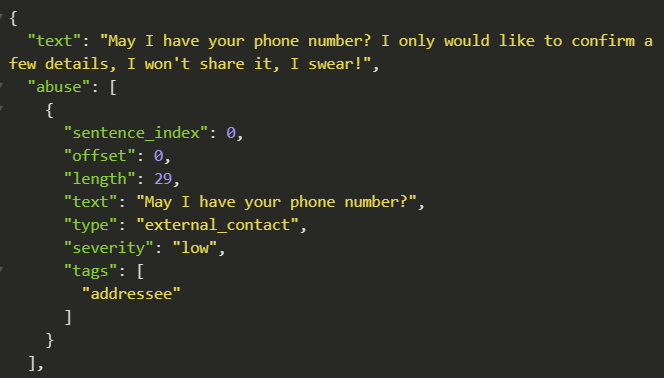

Or attempts to obtain contact details:

Integrating Tisane

As mentioned earlier, Tisane merely tags suspected violations. The classification capabilities, however, open new possibilities for moderators. We would like to float some proposals of new models, helping to balance the lack of manpower in the moderator team with the community’s well-being. All in all, we recommend adopting the model most suitable for the community:

- Alert the poster, suggesting that they rethink what they are about to publish (“was that really necessary?”).

- Quietly alert the moderators, pinpointing the offending snippet.

- Possibly in combination with the warning option, keep track of the frequency of abuses, with an automatic multiple strikes system. After a specified number of abuses, the account is temporarily suspended until reviewed by a human moderator. This is the best fit for a real-time chat.

- Provide an option for the other participants not to see content with particular types of abuse or even blacklist the abusive poster, unless explicitly white-listed (“you’re dead to me”). This is likely to be the most annoying option for the trolls, as they can no longer complain about the authorities misusing their power; in this scenario, it’s the other users that voluntarily took action against them.

- When a personal attack is detected, let the person being attacked moderate the message (“Don’t like the message? You can delete it”). If Tisane misfired, and no actual attack occurred, the other person will not react. If indeed a personal attack occurred, then the attacker will find themselves at the mercy of their victim. No effort is required of the moderators, and again, there is no reason to complain about the oppressive authorities. A more controversial option would be to extend this “crowd-moderation” to the bigotry/hate speech abuse, with users being mapped to protected groups.

- Censor the message completely or put it in custody until reviewed by a human moderator. As a general rule, we do not recommend this option; however, it may be required in certain environments (e.g. for legal or commercial reasons).

- Append a taunting message (e.g. “Sorry, I’m having a bad day today. Please ignore what I’m going to say next”). We also do not recommend this, as it interferes with the natural communication, and may even spur some abusive “experimentation”.
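The multiple strikes model from the list above can be sketched in a few lines of Python. Everything here is a hypothetical illustration: the `StrikeTracker` class, its action names, and the default threshold of three are our assumptions, and the abuse types are presumed to come from whatever tagging step precedes it.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class StrikeTracker:
    """Hypothetical strike counter; the threshold and action names are assumed."""
    max_strikes: int = 3
    strikes: dict = field(default_factory=lambda: defaultdict(int))
    suspended: set = field(default_factory=set)

    def record(self, user_id, abuse_types):
        """Return the action for a message: 'publish', 'warn', or 'suspended'."""
        if user_id in self.suspended:
            return "suspended"
        if not abuse_types:
            return "publish"
        self.strikes[user_id] += 1
        if self.strikes[user_id] >= self.max_strikes:
            # Suspension lasts until a human moderator reviews the account.
            self.suspended.add(user_id)
            return "suspended"
        return "warn"

    def reinstate(self, user_id):
        """Called after human review: lift the suspension and reset the count."""
        self.suspended.discard(user_id)
        self.strikes[user_id] = 0


tracker = StrikeTracker(max_strikes=2)
print(tracker.record("alice", []))                   # clean message
print(tracker.record("bob", ["personal_attack"]))    # first strike
print(tracker.record("bob", ["bigotry"]))            # second strike
```

Keeping the final decision in `reinstate` preserves the division of labor described earlier: the software counts and flags, and a human moderator decides.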

Everything is Good in Moderation: Social Impact

We stress again that the intended use of Tisane is to be an aid for moderators and analysts. However, with the ongoing debates about AI, ethics, and social media, we believe we should post some thoughts on it.

First, the impact on jobs. Today, moderators work in virtual sweatshops. They are demoralised, and the endless stream of the worst of human nature may be a real hazard to their mental health. Online communities often simply disappear because excessive abuse requires financing a moderation team:

Dagbladet decided to create an online presence as soon as 1995. At first, there was no moderation for their comments. In 2012, they outsourced the moderation to a Swedish company. A year after, they decided to test with internal moderators, whom he sees as “debate leaders”. Some senior reporters as well as three younger journalists monitored the comments and interacted with readers. “It worked pretty well but it was a costly operation,” says Markussen.

The ability to provide a useful experience to the community members without spending a fortune will allow more communities to flourish, creating more jobs for moderators and editors. Rather than eliminating jobs, more capable moderation automation tools preserve them.

Then, the impact on the freedom of speech. Like we stressed before, it’s up to the moderator to decide whether a violation occurred. But, more importantly, proper moderation does not impede freedom of speech. It promotes freedom of speech. In the numerous cases where communities closed down, how many online voices were silenced forever?

That is not counting the more prominent figures like Anita Sarkeesian and Wil Wheaton, deliberately targeted by trolls and forced to stop posting.

What do you prefer, human equivalents of xenomorphs slinging feces at everyone online, or a place where people who have something to say can say it without fear of retribution?

Peeking into the Crystal Ball

Tisane is not a panacea. It is a tool which will, hopefully, fix some of the issues. Natural language processing in 2019 is not on par with human linguistic capabilities, and certainly lacks understanding of all the nuances. However, Tisane was never meant to be a virtual Sherlock Holmes. It is at best a virtual garden-variety beat cop, or maybe even a service dog, directing the attention of its master or a superior to where it believes action must be taken. The subtler, more sophisticated attacks are not handled yet. We’re well aware of that.

But we’re happy with the situation. Trolls becoming craftier but fewer in number works for us. At this point, our objective is to raise the bar, and establish presence, in order to get rid of the troglodytes. We realize that if we succeed, a cat and mouse game will start, and that’s fine. Technologically, we’re only getting started.

On the other hand, the harder the trolls try to disguise their intent, the weaker the impact. If they have to misspell and confuse sentences to a barely readable form and resort to allegories, the reaction may be yawning more than distress.

Curious? Go ahead, visit our website, take a look at the demo, or sign up and start building cool stuff.